Official Computer Talk Thread

- Thread starter bootyluvr

- Thread starter

- #4,024

Sorry @Honda-Fan didn't mean to tag you 3 times above...

Honda-Fan

Well-Known Member

- Staff

- #4,026

- 10,437

- 7,079

- Vehicle Model

- Toyota Tacoma TRD Sport

- Body Style

- @bootyluvr hates hatches

I like the hair description.....

Janz3n

Well-Known Member

- Staff

- #4,028

- 57,214

- 24,355

Does it give you a blue screen of death if you try to reboot the machine?

- Staff

- #4,029

- 57,214

- 24,355

There is actually some good info in this support thread if you get a blue screen at boot every time.

https://answers.microsoft.com/en-us...c000021a/764d25ea-7122-46aa-8acf-9b8de31d6c92

Janz3n

Well-Known Member

oh hi, haven't turned my computer on in weeks.

- Staff

- #4,032

- 10,437

- 7,079

- Vehicle Model

- Toyota Tacoma TRD Sport

- Body Style

- @bootyluvr hates hatches

Apparently Charlotte is getting its first Micro Center store......

- Staff

- #4,033

- 57,214

- 24,355

Ohhhh booty’s fav store

- Thread starter

- #4,034

Yup, I saw Mike's FB post about it a week or so ago.

- Thread starter

- #4,035

@FooBird - I sold your old NZXT X53 AIO CPU cooler over the weekend. I never ended up using it. Posted it on FB Marketplace and this guy met me at a local police station to buy it.

I posted up the Corsair SFF PSU and had a few replies but no one followed through.

My ancient 6 month old Dell Latitude for work **** the bed. I’m on a loaner for the next couple of weeks.

- Staff

- #4,037

- 57,214

- 24,355

Does your police station use Clearview? It says it's been used by police over a million times.

===============

Clearview AI scraped 30 billion images from Facebook and gave them to cops: it puts everyone into a 'perpetual police line-up'

- Clearview AI scraped 30 billion photos from Facebook to build its facial recognition database.

- US police have used the database nearly a million times, the company's CEO told the BBC.

- One digital rights advocate told Insider the company is "a total affront to peoples' rights, full stop."

A controversial facial recognition database, used by police departments across the nation, was built in part with 30 billion photos the company scraped from Facebook and other social media users without their permission, the company's CEO recently admitted, creating what critics called a "perpetual police line-up," even for people who haven't done anything wrong.

The company, Clearview AI, boasts of its potential for identifying rioters at the January 6 attack on the Capitol, saving children being abused or exploited, and helping exonerate people wrongfully accused of crimes. But critics point to wrongful arrests fueled by faulty identifications made by facial recognition, including cases in Detroit and New Orleans.

Clearview took photos without users' knowledge, its CEO Hoan Ton-That acknowledged in an interview last month with the BBC. Doing so allowed for the rapid expansion of the company's massive database, which is marketed on its website to law enforcement as a tool "to bring justice to victims."

Ton-That told the BBC that Clearview AI's facial recognition database has been accessed by US police nearly a million times since the company's founding in 2017, though the relationships between law enforcement and Clearview AI remain murky and that number could not be confirmed by Insider.

Representatives for Clearview AI did not immediately respond to Insider's request for comment.

What happens when unauthorized scraping happens

The technology has long drawn criticism for its intrusiveness from privacy advocates and digital platforms alike, with major social media companies including Facebook sending cease-and-desist letters to Clearview in 2020 for violating their users' privacy.

"Clearview AI's actions invade people's privacy which is why we banned their founder from our services and sent them a legal demand to stop accessing any data, photos, or videos from our services," a Meta spokesperson said in an email to Insider, referencing a statement made by the company in April 2020 after it was first revealed that the company was scraping user photos and working with law enforcement.

Since then, the spokesperson told Insider, Meta has "made significant investments in technology" and devotes "substantial team resources to combating unauthorized scraping on Facebook products."

When unauthorized scraping is detected, the company may take action "such as sending cease and desist letters, disabling accounts, filing lawsuits, or requesting assistance from hosting providers" to protect user data, the spokesperson said.

However, even despite internal policies, once a photo has been scraped by Clearview AI, biometric face prints are made and cross-referenced in the database, tying the individuals to their social media profiles and other identifying information forever — and people in the photos have little recourse to try to remove themselves.

Residents of Illinois can opt out of the technology (by providing another photo that Clearview AI claims will only be used to identify which stored photos to remove) after the ACLU sued the company under a statewide privacy law, and succeeded in banning the sale of Clearview AI technology nationwide to private businesses. However, residents of other states do not have the same option and the company is still permitted to partner with law enforcement.

'A perpetual police line-up'

"Clearview is a total affront to peoples' rights, full stop, and police should not be able to use this tool," Caitlin Seeley George, the director of campaigns and operations for Fight for the Future, a nonprofit digital rights advocacy group, said in an email to Insider, adding that "without laws stopping them, police often use Clearview without their department's knowledge or consent, so Clearview boasting about how many searches is the only form of 'transparency' we get into just how widespread use of facial recognition is."

CNN reported Clearview AI last year claimed the company's clients include "more than 3,100 US agencies, including the FBI and Department of Homeland Security." BBC reported Miami Police acknowledged they use the technology for all kinds of crimes, from shoplifting to murder.

The risk of being included in what is functionally a "perpetual police line-up" applies to everyone, including people who think they have nothing to hide, Matthew Guariglia, a senior policy analyst for the international non-profit digital rights group Electronic Frontier Foundation, told Insider.

"You don't know what you have to hide," Guariglia told Insider. "Governments come and go and things that weren't illegal become illegal. And suddenly, you could end up being somebody who could be retroactively arrested and prosecuted for something that wasn't illegal when you did it."

"I think the primary example that we're seeing now is abortion," he continued, "in that people who received abortions in a state where it was legal at the time, suddenly have to live in fear of some kind of retroactive prosecution — and suddenly what you didn't think you had to hide you actually do have to hide."

Photos can come from anywhere on the web

Even people who are concerned about the risk of their photos being added to the database may end up included through no fault of their own, both Seeley George and Guariglia said. That people may end up in Clearview's database, despite Facebook's policies against scraping or their own personal security measures, is an indicator that privacy "is a team sport," Guariglia told Insider.

"I think that's one of the nefarious things about it," Guariglia said. "Because you might be very aware of what Clearview does, and so prevent any of your social media profiles from being crawled by Google, to make sure that the picture you post isn't publicly accessible on the open web, and you think 'this might keep me safe.' But the thing about Clearview is it recognizes pictures of you anywhere on the web."

That means, he said, that if you are in the background of a wedding photo, or a friend of yours posts a picture of you together at high school, once Clearview has snapped a picture of your face, it will create a permanent biometric print of your face to be included in the database.

Clearview and law enforcement

Searching Clearview's database is just one of many ways law enforcement can make use of content posted to social media platforms to aid in investigations, including making requests directly to the platform for user data. However, the use of Clearview AI or other facial recognition technologies by law enforcement is not monitored in most states and is not subject to nationwide regulation — though critics like Seeley George and Guariglia argue it should be banned.

Representatives for the FBI, Department of Homeland Security, Los Angeles Police Department, and New York Police Department did not immediately respond to Insider's requests for comment.

"This is part of the opacity of both police departments and Clearview. We have no idea if they have to enter a warrant in order to run a query, which they probably don't; we have no idea if their queries are overseen by a supervisor," Guariglia told Insider, adding that the program is often directly loaded onto officers' phones, often without their department's knowledge or approval.

Following the Illinois lawsuit brought by the ACLU, Clearview said it would end its practice of offering free trial accounts to individual police officers.

Guariglia added: "I think we really need to ask: how strictly are the queries they put through being monitored? You live in fear all the time of a police officer pulling their phone out at a protest, scanning the faces of the crowd, all of a sudden getting their social media profiles, every picture they've ever been in, their identities — and the threat that poses to civil liberties and the vulnerability that opens up to people in terms of retribution or reprisal."

- Staff

- #4,038

- 10,437

- 7,079

- Vehicle Model

- Toyota Tacoma TRD Sport

- Body Style

- @bootyluvr hates hatches

What's the difference between this and something like a city camera posted on a street corner to monitor street flow, or a red light cam, or a toll lane camera?

Also, are people going to start getting in trouble if they take pictures from someone's social media account? As in, I went on a trip with a group of people and I didn't bring a camera. So I go to my friend's Facebook page and save the pics of the trip.

I know the above is being used by police to identify people, but really what's the difference? I guess it's a big deal since now a picture can be identified as a certain person?

- Staff

- #4,039

- 57,214

- 24,355

They’ve built a biometric database with your name/info etc. from your pics. So they can take that and apply it to facial recognition. A traffic cam recording you doesn’t have your name and personal info tied to it. Well, I guess now it does if they buy the biometrics from Clearview.

- Thread starter

- #4,040

Does your police station use Clearview? It says it's been used by police over a million times.

===============

Clearview AI scraped 30 billion images from Facebook and gave them to cops: it puts everyone into a 'perpetual police line-up'

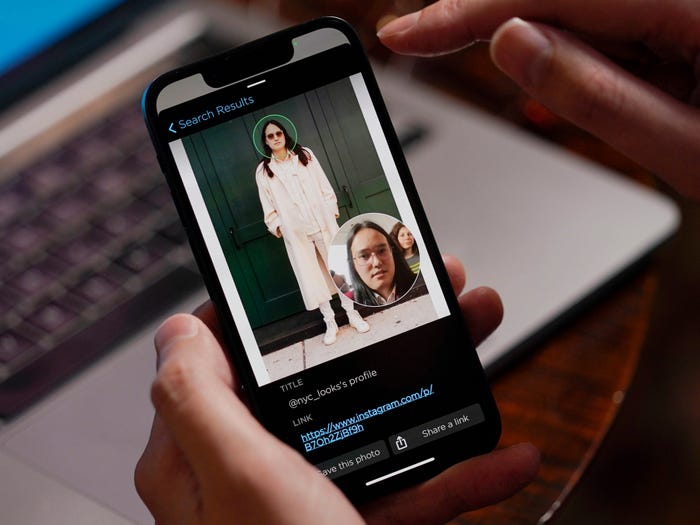

Hoan Ton-That, CEO of Clearview AI, demonstrates the company's facial recognition software using a photo of himself in New York on Tuesday, Feb. 22, 2022.

- Clearview AI scraped 30 billion photos from Facebook to build its facial recognition database.

- US police have used the database nearly a million times, the company's CEO told the BBC.

- One digital rights advocate told Insider the company is "a total affront to peoples' rights, full stop."

A controversial facial recognition database, used by police departments across the nation, was built in part with 30 billion photos the company scraped from Facebook and other social media users without their permission, the company's CEO recently admitted, creating what critics called a "perpetual police line-up," even for people who haven't done anything wrong.

The company, Clearview AI, boasts of its potential for identifying rioters at the January 6 attack on the Capitol, saving children being abused or exploited, and helping exonerate people wrongfully accused of crimes. But critics point to wrongful arrests fueled by faulty identifications made by facial recognition, including cases in Detroit and New Orleans.

Clearview took photos without users' knowledge, its CEO Hoan Ton-That acknowledged in an interview last month with the BBC. Doing so allowed for the rapid expansion of the company's massive database, which is marketed on its website to law enforcement as a tool "to bring justice to victims."

Ton-That told the BBC that Clearview AI's facial recognition database has been accessed by US police nearly a million times since the company's founding in 2017, though the relationships between law enforcement and Clearview AI remain murky and that number could not be confirmed by Insider.

Representatives for Clearview AI did not immediately respond to Insider's request for comment.

What happens when unauthorized scraping happens

The technology has long drawn criticism for its intrusiveness from privacy advocates and digital platforms alike, with major social media companies including Facebook sending cease-and-desist letters to Clearview in 2020 for violating their user's privacy.

"Clearview AI's actions invade people's privacy which is why we banned their founder from our services and sent them a legal demand to stop accessing any data, photos, or videos from our services," a Meta spokesperson said in an email to Insider, referencing a statement made by the company in April 2020 after it was first revealed that the company was scraping user photos and working with law enforcement.

Since then, the spokesperson told Insider, Meta has "made significant investments in technology" and devotes "substantial team resources to combating unauthorized scraping on Facebook products."

When unauthorized scraping is detected, the company may take action "such as sending cease and desist letters, disabling accounts, filing lawsuits, or requesting assistance from hosting providers" to protect user data, the spokesperson said.

However, even despite internal policies, once a photo has been scraped by Clearview AI, biometric face prints are made and cross-referenced in the database, tying the individuals to their social media profiles and other identifying information forever — and people in the photos have little recourse to try to remove themselves.

Residents of Illinois can opt out of the technology (by providing another photo that Clearview AI claims will only be used to identify which stored photos to remove) after the ACLU sued the company under a statewide privacy law, and succeeded in banning the sale of Clearview AI technology nationwide to private businesses. However, residents of other states do not have the same option and the company is still permitted to partner with law enforcement.

'A perpetual police line-up'

"Clearview is a total affront to peoples' rights, full stop, and police should not be able to use this tool," Caitlin Seeley George, the director of campaigns and operations for Fight for the Future, a nonprofit digital rights advocacy group, said in an email to Insider, adding that "without laws stopping them, police often use Clearview without their department's knowledge or consent, so Clearview boasting about how many searches is the only form of 'transparency' we get into just how widespread use of facial recognition is."

CNN reported Clearview AI last year claimed the company's clients include "more than 3,100 US agencies, including the FBI and Department of Homeland Security." BBC reported Miami Police acknowledged they use the technology for all kinds of crimes, from shoplifting to murder.

The risk of being included in what is functionally a "perpetual police line-up" applies to everyone, including people who think they have nothing to hide, Matthew Guariglia, a senior policy analyst for the international non-profit digital rights group Electronic Frontier Fund, told Insider.

"You don't know what you have to hide," Guariglia told Insider. "Governments come and go and things that weren't illegal become illegal. And suddenly, you could end up being somebody who could be retroactively arrested and prosecuted for something that wasn't illegal when you did it."

"I think the primary example that we're seeing now is abortion," he continued, "in that people who received abortions in a state where it was legal at the time, suddenly have to live in fear of some kind of retroactive prosecution — and suddenly what you didn't think you had to hide you actually do have to hide."

Photos can come from anywhere on the web

Even people who are concerned about the risk of their photos being added to the database may end up included through no fault of their own, both Seeley George and Guariglia said. That people may end up in Clearview's database, despite Facebook's policies against scraping or their own personal security measures, is an indicator that privacy "is a team sport," Guariglia told Insider.

"I think that's one of the nefarious things about it," Guariglia said. "Because you might be very aware of what Clearview does, and so prevent any of your social media profiles from being crawled by Google, to make sure that the picture you post isn't publicly accessible on the open web, and you think 'this might keep me safe.' But the thing about Clearview is it recognizes pictures of you anywhere on the web."

That means, he said, that if you are in the background of a wedding photo, or a friend of yours posts a picture of you together at high school, once Clearview has snapped a picture of your face, it will create a permanent biometric print of your face to be included in the database.

Clearview and law enforcement

Searching Clearview's database is just one of many ways law enforcement can make use of content posted to social media platforms to aid in investigations, including making requests directly to the platform for user data. However, the use of Clearview AI or other facial recognition technologies by law enforcement is not monitored in most states and is not subject to nationwide regulation — though critics like Seeley George and Guariglia argue it should be banned.

Representatives for the FBI, Department of Homeland Security, Los Angeles Police Department, and New York Police Department did not immediately respond to Insider's requests for comment.

"This is part of the opacity of both police departments and Clearview. We have no idea if they have to enter a warrant in order to run a query, which they probably don't; we have no idea if their queries are overseen by a supervisor," Guariglia told Insider, adding that the program is often directly loaded onto officer's phones, often without their department's knowledge or approval.

Following the Illinois lawsuit brought by the ACLU, Clearview said it would end its practice of offering free trial accounts to individual police officers.

Guariglia added: "I think we really need to ask: how strictly are the queries they put through being monitored? You live in fear all the time of a police officer pulling their phone out at a protest, scanning the faces of the crowd, all of a sudden getting their social media profiles, every picture they've ever been in, their identities — and the threat that poses to civil liberties and the vulnerability that opens up to people in terms of retribution or reprisal."

[/URL]

Nope.